Mitigation Steps

Several groups are taking steps to avoid some of the flaws outlined above. A few of these efforts are worth mentioning, again as broad categories of mitigation rather than specific tools, since many of the individual tools will likely be surpassed in quality and relevance in the near future.

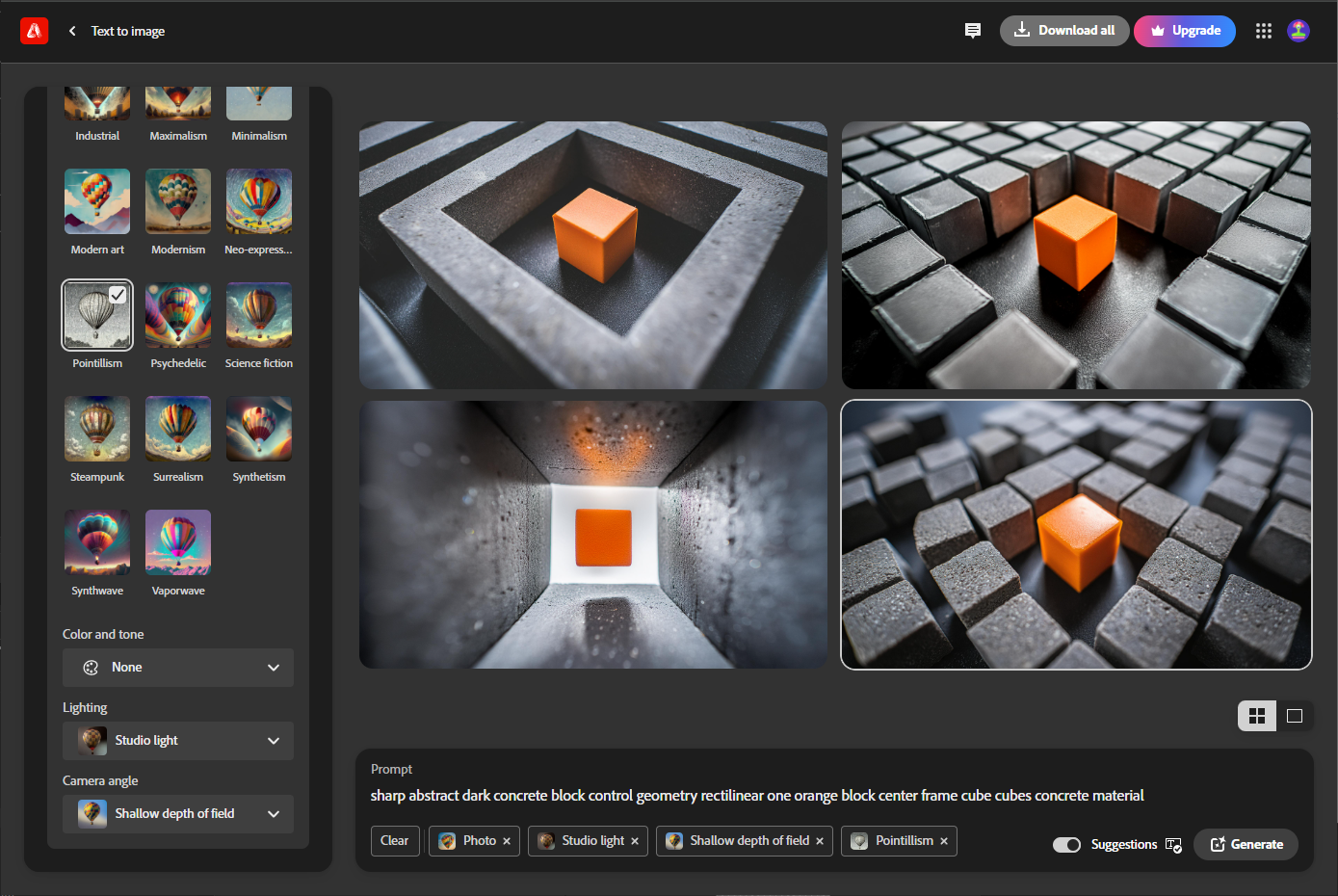

A slate of copyright-friendly AI tools is coming to market. This is the simplest problem to solve in the ethical and legal space of AI: obtain the consent of the creators whose material populates the training set before using that data to train a model. The models currently in broad circulation were trained on whatever data was freely available on the internet, and the straightforward alternative is to license content from creators for use in the training set. This takes considerable work to maintain output quality, because much of these tools' quality comes from the breadth of their training data. Consent and licensing are relatively easy to address, and tools like Adobe Firefly and Generative AI by Getty Images are already doing so. Compensation is much harder: at the time of writing, no mass-market tool is successfully compensating the creators whose work is used beyond a one-time licensing agreement.

Many of the problems with representation and bias in current AI tooling simply reflect the dominance of Western, English-language content on the internet. Efforts are needed to diversify the training data used for these models while also implementing parallel systems that can detect and flag these issues (United Language Group, 2023).

One system that aims to address these concerns is a variant of something covered earlier: constitutional AI. A constitutional AI system uses reinforcement learning driven by AI feedback instead of human feedback. In such a system, the output of one AI model is evaluated by an AI model guided by a written code of ethics and content policy, or constitution. In theory, this allows the AI tool to run without a human vetting every piece of content it produces. The most prominent current example of this approach is Anthropic's Claude LLM (Vincent, 2023a).
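To make the idea more concrete, the self-critique step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Anthropic's implementation: `critique_and_revise`, `toy_model`, and the `CONSTITUTION` principles are hypothetical names and illustrative text, and the "model" is a plain function rather than any real API. The sketch drafts a response, then for each principle asks the model to critique the draft and rewrite it to address that critique. In Anthropic's published description of the method, revised responses like these are used for fine-tuning, and a later reinforcement-learning stage uses AI-generated preference judgments in place of human ones; neither stage is shown here.

```python
from typing import Callable, List

# Hypothetical stand-in for a language model: any function mapping a prompt to text.
Model = Callable[[str], str]

# Illustrative principles only; not Anthropic's actual constitution.
CONSTITUTION: List[str] = [
    "Identify any content that is harmful, unethical, or biased.",
    "Identify any content that reproduces copyrighted material without permission.",
]


def critique_and_revise(model: Model, prompt: str, principles: List[str]) -> str:
    """Draft a response, then critique and rewrite it once per principle."""
    response = model(prompt)
    for principle in principles:
        # The model critiques the current draft against a single written principle...
        critique = model(
            f"Prompt: {prompt}\nResponse: {response}\n"
            f"Critique the response using this principle: {principle}"
        )
        # ...then rewrites the draft to address that critique.
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
    return response


if __name__ == "__main__":
    calls: List[str] = []

    def toy_model(text: str) -> str:
        # Toy model for demonstration: records each prompt and returns a numbered label.
        calls.append(text)
        return f"output {len(calls)}"

    final = critique_and_revise(toy_model, "Write a product description.", CONSTITUTION)
    print(final)       # output 5
    print(len(calls))  # 5: one draft plus a critique and a revision per principle
```

A single `model` argument plays both the generating and the evaluating role here; a variant could accept a separate evaluator model, closer to the two-system arrangement described above.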

In summary, some companies are addressing AI flaws by developing copyright-friendly tools and obtaining consent from creators for training data use. Examples include Adobe Firefly and Generative AI by Getty Images, though compensating creators remains a challenge. Efforts to diversify training data and implement bias-checking systems are underway to address representation issues. Constitutional AI, as used in Anthropic's Claude LLM, relies on reinforcement learning and a written code of ethics to verify outputs, reducing reliance on human vetting.

Looking Ahead

In the short term, AI is positioned to continue its rapid growth across many fields. Advances in the underlying technologies are expected to enhance the capabilities of AI systems. At the same time, there is a growing emphasis on responsible AI practices, with a focus on addressing bias, ensuring transparency, and promoting ethical considerations in AI development. The AI community seems divided among a few camps: accelerationists, decelerationists, and proponents of AI alignment.

AI accelerationism (Mariushobbhahn & Tilman, 2022) is the view that the development and deployment of AI technologies should be accelerated to bring about rapid societal transformation. Proponents believe that faster AI progress could lead to positive outcomes such as solving complex problems, increasing efficiency, and unlocking new possibilities (Jezos & Bayeslord, 2022).

AI decelerationism advocates slowing down the pace of AI development and deployment (Future of Life Institute, 2023). Its proponents argue for a more cautious, measured approach that carefully weighs the potential risks, ethical concerns, and societal impact of rapidly advancing AI technologies (Statement on AI Risk | CAIS, n.d.). The position underscores the importance of responsible, deliberate development to address challenges related to safety, ethics, and the broader implications of AI for society (Aguirre, 2023).

AI alignment (Ji, 2023) refers to the goal of ensuring that AI systems act in accordance with human values, goals, and objectives. It involves designing AI systems so that their behavior matches what their creators intend, preventing unintended and potentially harmful consequences. The challenge lies in developing methods and frameworks that guide AI systems to understand and follow human values, especially as they become more advanced and capable of autonomous decision-making. Achieving alignment is crucial for the ethical and safe deployment of AI technologies across applications.

No matter which camp someone finds themselves in, it is impossible to argue that these tools will not continue to become even more integrated into daily life. It is worth pausing to consider that this rapid growth in AI technology, while built on a long and rich history, has occurred primarily since 2021. In the two years leading up to the time of writing, the sector has seen unprecedented growth, adoption, and technical advancement. This is unlikely to be a linear or purely growth-based curve of advancement, but it is reasonable to assume that the landscape of AI will look very different two years from now.

Conclusion

The point worth emphasizing here is that these tools are just that: tools. In many ways an LLM is no different from spell check, and Midjourney has parallels to Photoshop. Great things can be created with either, but the author has ultimate control. Key to that control is an understanding of how the tool works and what it can do. No one would try to paint in Word or write the next great novel in Photoshop. Knowing what a tool is good for, and what intentions, biases, and decisions went into its creation, should directly determine what it is used for.

When creating with AI tooling, authors, designers, and anyone else need to understand that these are unthinking tools that directly reflect what they have been trained on, with no critical thought and only basic guardrails. These systems are incredibly complex and technically impressive, but their outputs should always be vetted and reviewed.

My biases likely place this series somewhere between the decelerationist and alignment camps, but the goal nevertheless was education and a discussion of the tools. It is fair to argue that any creation has flaws: blog posts and LLMs alike are subject to bias, factual errors, and omissions.

The concern is that with tools as popular and powerful as ChatGPT and Midjourney, the effects of any error, omission, or bias are amplified thousands of times over. That reach carries great responsibility, and with an increasingly large segment of the population using and beginning to rely on these tools, we owe it to ourselves to be educated and mindful of how they work, what their flaws are, and where they are headed. They will shape the future of technology for a long time to come, and as they continue to be developed, they must be treated with care, caution, and excitement.